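OVS's DPDK-based datapath bypasses the kernel network stack and adds many optimizations, which brings a real performance gain. It also introduces many parameters that must be configured, and these parameters are closely tied to performance.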

Parameters

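Official ovsdb documentation: the parameter table below only gives a rough translation of what each parameter does and is for reference only. Consult the official documentation for details.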

ovsdb stores all of OVS's configuration, organized into tables (TABLE). Only the DPDK-related parameters are covered here.

Open_vSwitch Table

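| Parameter | Description |
|---|---|
| `other_config:dpdk-init=true` | Whether to enable the DPDK datapath. |
| `other_config:dpdk-lcore-mask=0x1` | Cores on which DPDK lcore threads are created. These threads handle DPDK-library internal work such as logging. If unset, defaults to the first core in the CPU affinity list. For best performance, set it to a single core. |
| `other_config:pmd-cpu-mask=0x30` | Cores on which PMD threads are created. If unset, one PMD thread is created per NUMA node and pinned to a core on that node. |
| `other_config:smc-enable=true` | Whether to enable the SMC (signature match cache), which is more memory-efficient than the EMC; especially useful when the number of flows exceeds 8192. Default: false. |
| `other_config:per-port-memory=true` | Whether to use the per-port memory model. By default, devices with the same MTU and CPU socket share a mempool. |
| `other_config:dpdk-alloc-mem` | Amount of hugepage memory, in MB, to preallocate regardless of socket (CPU). Using `dpdk-socket-mem` instead is recommended. |
| `other_config:dpdk-socket-mem="1024,1024"` | Amount of hugepage memory, in MB, to preallocate on each socket (CPU), as a comma-separated list. For example, on a 4-socket system, "1024,0,1024" preallocates 1024 MB on sockets 0 and 2 and nothing on sockets 1 and 3. If neither `dpdk-alloc-mem` nor `dpdk-socket-mem` is set, the DPDK library's default is used for each NUMA node; if both are set, `dpdk-socket-mem` is used. Changing it requires restarting the daemon. |
| `other_config:dpdk-socket-limit` | Upper limit on the memory each socket (CPU) may use, since the `dpdk-socket-mem` allocation can grow dynamically. Comma-separated, e.g. "2048,0,0" on a 4-socket system; 0 means no limit. Default: no limit. |
| `other_config:dpdk-hugepage-dir` | Path of the hugetlbfs mount point. Default: /dev/hugepages. |
| `other_config:dpdk-extra` | Additional DPDK EAL command-line arguments. |
| `other_config:vhost-sock-dir` | Directory for the vhost-user socket files, as a path relative to the OVS run directory. If unset, the sockets are created directly in the run directory. |
| `other_config:tx-flush-interval=0` | Maximum time, in µs, a packet may wait in an output batch (so that up to 32 packets can be sent together). Trades throughput against latency: a smaller value gives lower latency but also lower throughput. Default: 0. |
| `other_config:pmd-perf-metrics=true` | Whether to record detailed PMD performance metrics. Default: false. |
| `other_config:pmd-rxq-assign=cycles` | How rx queues are assigned to cores. Three algorithms: cycles, roundrobin, group. Default: cycles. |
| `other_config:pmd-rxq-isolate=true` | Whether a core that has been assigned an rx queue via `pmd-rxq-affinity` is isolated. If false, OVS may still assign other rx queues to that core. **Default: true.** |
| `other_config:emc-insert-inv-prob` | Inverse probability of inserting a flow into the EMC (probability = 1 / emc-insert-inv-prob); 1 means every flow is inserted (100%), 0 disables EMC insertion. Default: 100. |
| `other_config:vhost-postcopy-support=true` | Enables vhost post-copy live-migration support (default: false). The author did not dig into why, but in testing it gave some throughput improvement on vhost-user ports! |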
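The `*-mask` values in the table are hexadecimal bitmaps in which bit N selects CPU core N (for example 0x30 = cores 4 and 5). Below is a small bash helper for building such a mask from a list of core IDs. The `cores_to_mask` name is hypothetical and is not part of OVS.

```bash
#!/usr/bin/env bash
# Build a hex CPU mask (as used by pmd-cpu-mask / dpdk-lcore-mask / EAL -c)
# from a list of core IDs: bit N of the mask is set when core N is listed.
cores_to_mask() {
  local mask=0 core
  for core in "$@"; do
    mask=$(( mask | (1 << core) ))
  done
  printf '0x%x\n' "$mask"
}

cores_to_mask 1 2   # PMD threads on cores 1 and 2 -> prints 0x6
cores_to_mask 4 5   # pktgen on cores 4 and 5      -> prints 0x30
```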

These parameters are mainly changed as follows (note that some of them only take effect after OVS is restarted):

```bash
ovs-vsctl set open_vswitch . other_config:vhost-postcopy-support=true
ovs-vsctl set open_vswitch . other_config:per-port-memory=true
```
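After changing parameters, it helps to read them back from ovsdb to confirm what is actually stored. The following are standard `ovs-vsctl` queries, sketched with key names taken from the table above. They are not part of the original test procedure.

```bash
# Show every other_config key currently stored in the Open_vSwitch table
ovs-vsctl get Open_vSwitch . other_config

# Read a single key (fails with an error if the key was never set)
ovs-vsctl get Open_vSwitch . other_config:pmd-cpu-mask

# Check whether DPDK was actually initialized after setting dpdk-init=true
ovs-vsctl get Open_vSwitch . dpdk_initialized

# Remove a key so that OVS falls back to its default value
ovs-vsctl remove Open_vSwitch . other_config vhost-postcopy-support
```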

Test Setup

Note: all tests here use stock, unmodified OVS! Unless otherwise stated, results were obtained on the following platform:

ovs-2.16.0

dpdk-20.11.6

CPU: i7-12700K

OS: Ubuntu 22.04

Kernel: 5.15.0-52-generic

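The tests mainly compare the throughput of the OVS kernel datapath with that of the DPDK datapath, and measure how different configuration parameters affect performance.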

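Besides the OVS configuration parameters, other factors can also affect the final results: the features supported by the NIC (`ethtool --show-features eth0`), and the traffic generator and its parameters!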

Metric: receive-side bandwidth!

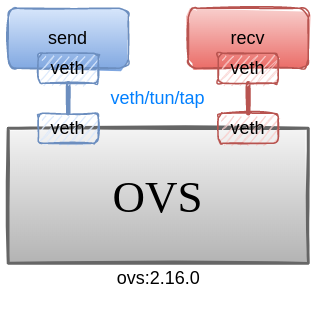

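Topo1: compares performance under different configuration parameters. Topo2: same as Topo1, but uses a different traffic tool, iperf (which can be used on veth-type ports), for comparison. Topo3: with veth-type ports, no PMD threads are started; this topology measures performance in that case. Topo4: same procedure as Topo3 but without DPDK, so that the two can be compared.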

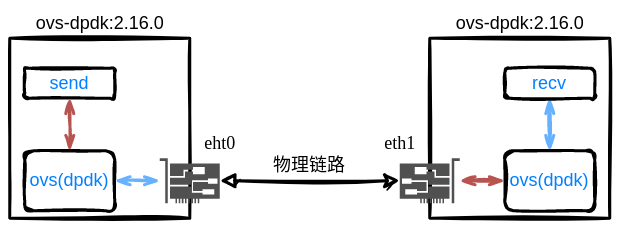

OVS is started on two hosts to test the forwarding capacity of OVS and of OVS-DPDK, respectively.

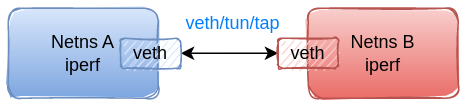

base: first, measure the link bandwidth of two virtual ports connected directly, without OVS.

```bash
# Two network namespaces connected by a veth pair
sudo ip netns add A
sudo ip netns add B
sudo ip link add ethA type veth peer name ethB
sudo ip link set dev ethA name ethA netns A up
sudo ip link set dev ethB name ethB netns B up
sudo ip netns exec A ifconfig ethA 10.0.0.10/24 up
sudo ip netns exec B ifconfig ethB 10.0.0.11/24 up

# iperf server in B, client in A
sudo ip netns exec B iperf -s -i 1
sudo ip netns exec A iperf -c 10.0.0.11

# Cleanup
ip netns list
sudo ip netns delete A
sudo ip netns delete B
```

Test results

| Case | Test environment | iperf-tcp |
|---|---|---|
| c1 | veth-veth direct connection | 102 Gbps |
| c2 | Docker default bridge docker0 | 79.7 Gbps |

```
$ sudo ip netns exec A iperf -c 10.0.0.11
------------------------------------------------------------
Client connecting to 10.0.0.11, TCP port 5001
TCP window size: 85.0 KByte (default)
------------------------------------------------------------
[  1] local 10.0.0.10 port 44154 connected with 10.0.0.11 port 5001
[ ID] Interval       Transfer     Bandwidth
[  1] 0.0000-10.0099 sec   119 GBytes   102 Gbits/sec

$ sudo ip netns exec B iperf -s -i 1
```

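Note: iperf can also generate UDP traffic, but the measured bandwidth is far below that of TCP (see "iperf TCP much faster than UDP, why?"). That thread suggests changing the datagram size, but this does not help here. iperf's default of 1470 bytes is essentially the largest payload that fits in one 1500-byte-MTU packet, so a larger value forces IP fragmentation and makes the rate even lower.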

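Judging from the tests, the iperf sender probably cannot generate UDP packets fast enough. That is why the measured bandwidth is far below the TCP result.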

```
sudo ip netns exec B iperf -s -i 1 -u
sudo ip netns exec A iperf -u -c 10.0.0.11 -b 10g
------------------------------------------------------------
Client connecting to 10.0.0.11, UDP port 5001
Sending 1470 byte datagrams, IPG target: 1.18 us (kalman adjust)
UDP buffer size:  208 KByte (default)
------------------------------------------------------------
[  1] local 10.0.0.10 port 50645 connected with 10.0.0.11 port 5001
[ ID] Interval       Transfer     Bandwidth
[  1] 0.0000-10.0047 sec  8.37 GBytes  7.19 Gbits/sec
[  1] Sent 6113230 datagrams
[  1] Server Report:
[ ID] Interval       Transfer     Bandwidth        Jitter   Lost/Total Datagrams
[  1] 0.0000-10.0046 sec  8.26 GBytes  7.09 Gbits/sec   0.001 ms 88036/6123229 (1.4%)
```
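The fragmentation argument behind the 1470-byte datagrams is simple arithmetic. On a 1500-byte MTU, the IPv4 header (20 bytes, assuming no options) and the UDP header (8 bytes) leave 1472 bytes of payload. Anything larger must therefore be fragmented. A minimal sketch of the calculation follows; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Largest UDP payload that fits in a single IPv4 packet without fragmentation,
# assuming a 20-byte IPv4 header (no options) and the 8-byte UDP header.
max_udp_payload() {
  local mtu="$1"
  echo $(( mtu - 20 - 8 ))
}

max_udp_payload 1500   # prints 1472
max_udp_payload 9000   # jumbo frames: prints 8972
```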

vhost-user

```bash
# Start from a clean database, runtime and log directory
rm /usr/local/etc/openvswitch/*
rm /usr/local/var/run/openvswitch/*
rm /usr/local/var/log/openvswitch/*

# Start ovsdb-server, configure DPDK, then start ovs-vswitchd
ovs-ctl --no-ovs-vswitchd start --system-id=random
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0x1
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x6
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start

# Userspace (netdev) bridge with three vhost-user ports
ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev
ovs-vsctl add-port br0 vhost-user1 \
    -- set Interface vhost-user1 type=dpdkvhostuser ofport_request=1
ovs-vsctl add-port br0 vhost-user2 \
    -- set Interface vhost-user2 type=dpdkvhostuser ofport_request=2
ovs-vsctl add-port br0 vhost-user3 \
    -- set Interface vhost-user3 type=dpdkvhostuser ofport_request=3
```


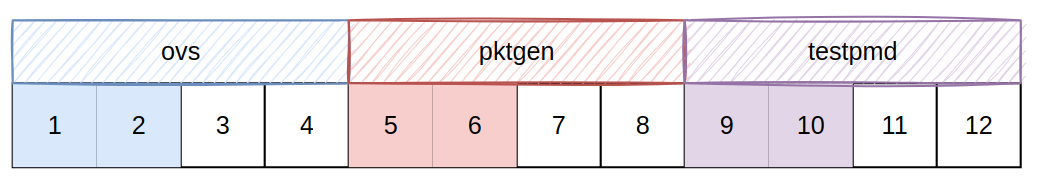

Core assignment shown in the figure: pmd = 0x1, pktgen = 0x30, testpmd = 0x300.

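pktgen and testpmd also seem to run in a PMD-style polling mode and fully occupy the cores they run on. When configuring the core masks, keep the cores of the different programs separate; otherwise performance suffers!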

```bash
docker run -it --rm --privileged --name=app-pktgen \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen20:latest /bin/bash

pktgen -c 0x30 -n 1 --socket-mem 1024 --file-prefix pktgen --no-pci \
    --vdev 'net_virtio_user1,mac=00:00:00:00:00:01,path=/var/run/openvswitch/vhost-user1' \
    -- -T -P -m "5.0"
```

```bash
docker run -it --rm --privileged --name=app-testpmd \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen20:latest /bin/bash

dpdk-testpmd -c 0x300 -n 1 --socket-mem 1024 --file-prefix testpmd --no-pci \
    --vdev 'net_virtio_user2,mac=00:00:00:00:00:02,path=/var/run/openvswitch/vhost-user2' \
    -- -i -a --coremask=0x200 --forward-mode=rxonly
```
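A bitwise AND is enough to check whether two core masks share a core. Below is a small bash helper (hypothetical, not part of OVS or DPDK). It is applied to the masks used in this setup: lcore 0x1 and PMD 0x6 from the OVS script, plus pktgen 0x30 and testpmd 0x300.

```bash
#!/usr/bin/env bash
# Succeeds (exit status 0) if two hex CPU masks have at least one core in common.
masks_overlap() {
  (( ($1 & $2) != 0 ))
}

# Check every pair of masks; nothing is printed when all masks are disjoint.
masks=(0x1 0x6 0x30 0x300)
for ((i = 0; i < ${#masks[@]}; i++)); do
  for ((j = i + 1; j < ${#masks[@]}; j++)); do
    if masks_overlap "${masks[i]}" "${masks[j]}"; then
      echo "overlap: ${masks[i]} and ${masks[j]}"
    fi
  done
done
```

Note that testpmd's `-c 0x300` and `--coremask=0x200` overlap on purpose. The forwarding mask is a subset of the EAL mask, so the pair-wise check only makes sense across different programs.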

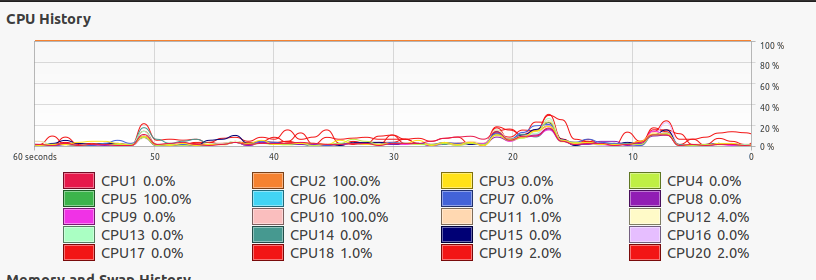

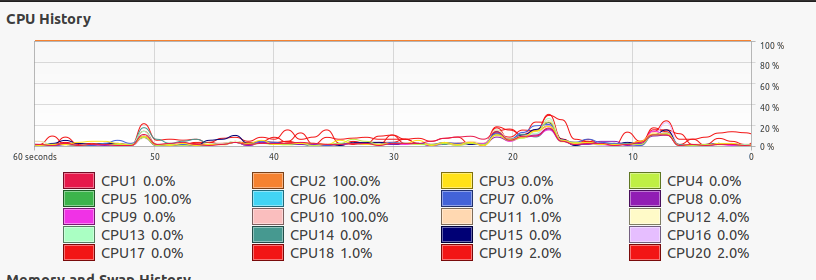

As shown, CPU usage matches the configuration! Pktgen occupies two cores.

Test results

| Case | Configuration | 64-byte (bps) | 64-byte (pps) | 1500-byte (bps) | 1500-byte (pps) |
|---|---|---|---|---|---|
| c1 | Default flow (NORMAL) | 3 016 608 216 | 6 284 600 | 42 754 318 664 | 3 572 386 |
| c2 | c1 + flows matching the input port | 7 849 813 576 | 16 353 825 | 88 330 499 920 | 7 380 556 |
| c3 | c2 + vhost-postcopy-support | 8 086 507 608 | 16 846 890 | 96 284 271 416 | 8 045 142 |

Additional result for the 2send-1recv setup below (2 senders, 1 receiver, 2 PMDs): 125 975 200 120 bps, 10 525 949 pps.

c2: install flows that match on the input port:

```bash
ovs-ofctl add-flow br0 in_port=vhost-user1,actions=output:vhost-user2
ovs-ofctl add-flow br0 in_port=vhost-user3,actions=output:vhost-user4
ovs-ofctl add-flow br0 in_port=vhost-user5,actions=output:vhost-user6
```

c3: set vhost-postcopy-support=true:

```bash
ovs-vsctl set open_vswitch . other_config:vhost-postcopy-support=true
```
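Dividing bps by pps in the results table gives the average number of bytes per packet, which makes a quick sanity check on the figures. For the c1 row this works out to 60 and 1496 bytes, which is 4 bytes less than the configured packet size. The likely reason (an assumption, not verified here) is that pktgen's size includes the 4-byte Ethernet FCS, which is never counted on a virtio link. The helper names below are hypothetical.

```bash
#!/usr/bin/env bash
# Average bytes per packet implied by a bps/pps pair (bits rounded to nearest).
bytes_per_pkt() {
  local bps="$1" pps="$2"
  local bits=$(( (bps + pps / 2) / pps ))
  echo $(( bits / 8 ))
}

# Convert bps to Gbps with two decimals (rounded to the nearest 0.01).
to_gbps() {
  local centi=$(( ($1 + 5000000) / 10000000 ))
  printf '%d.%02d\n' $(( centi / 100 )) $(( centi % 100 ))
}

bytes_per_pkt 3016608216 6284600     # c1, 64-byte:   prints 60
bytes_per_pkt 42754318664 3572386    # c1, 1500-byte: prints 1496
to_gbps 96284271416                  # c3, 1500-byte: prints 96.28
```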

2send-1recv

```bash
rm /usr/local/etc/openvswitch/*
rm /usr/local/var/run/openvswitch/*
rm /usr/local/var/log/openvswitch/*

ovs-ctl --no-ovs-vswitchd start --system-id=random
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0x1
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0xE
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start

# Netdev bridge with six vhost-user ports
ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev
ovs-vsctl add-port br0 vhost-user1 \
    -- set Interface vhost-user1 type=dpdkvhostuser ofport_request=1
ovs-vsctl add-port br0 vhost-user2 \
    -- set Interface vhost-user2 type=dpdkvhostuser ofport_request=2
ovs-vsctl add-port br0 vhost-user3 \
    -- set Interface vhost-user3 type=dpdkvhostuser ofport_request=3
ovs-vsctl add-port br0 vhost-user4 \
    -- set Interface vhost-user4 type=dpdkvhostuser ofport_request=4
ovs-vsctl add-port br0 vhost-user5 \
    -- set Interface vhost-user5 type=dpdkvhostuser ofport_request=5
ovs-vsctl add-port br0 vhost-user6 \
    -- set Interface vhost-user6 type=dpdkvhostuser ofport_request=6
```


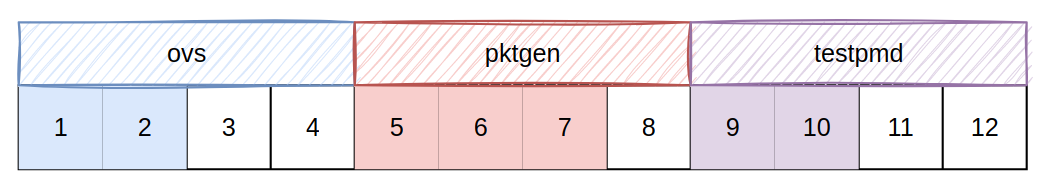

Core assignment shown in the figure: pmd = 0x6, pktgen1 = 0x30, pktgen2 = 0xc0, testpmd = 0x300, testpmd = 0xc00.

```bash
# Sender on vhost-user1
docker run -it --rm --privileged --name=app-pktgen3 \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen:20.11.3 /bin/bash
pktgen -c 0x30 -n 1 --socket-mem 1024 --file-prefix pktgen --no-pci \
    --vdev 'net_virtio_user1,mac=00:00:00:00:00:01,path=/var/run/openvswitch/vhost-user1' \
    -- -T -P -m "5.0"

# Sender on vhost-user3
docker run -it --rm --privileged --name=app-pktgen2 \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen20:latest /bin/bash
pktgen -c 0xc0 -n 1 --socket-mem 1024 --file-prefix pktgen --no-pci \
    --vdev 'net_virtio_user1,mac=00:00:00:00:00:03,path=/var/run/openvswitch/vhost-user3' \
    -- -T -P -m "7.0"

# Further senders on vhost-user5 and vhost-user4
pktgen -c 0xc000 -n 1 --socket-mem 1024 --file-prefix pktgen --no-pci \
    --vdev 'net_virtio_user1,mac=00:00:00:00:00:05,path=/var/run/openvswitch/vhost-user5' \
    -- -T -P -m "15.0"
pktgen -c 0xc00 -n 1 --socket-mem 1024 --file-prefix pktgen --no-pci \
    --vdev 'net_virtio_user1,mac=00:00:00:00:00:04,path=/var/run/openvswitch/vhost-user4' \
    -- -T -P -m "11.0"

# Pktgen CLI: packet sequences and destination IP range
sequence 0 0 dst 00:00:00:00:11:11 src 00:00:00:00:22:22 dst 192.168.1.1/24 src 192.168.1.3/24 sport 10001 dport 10003 ipv4 udp vlan 3000 size 1500
sequence 2 0 dst 00:00:00:00:11:11 src 00:00:00:00:22:22 dst 192.168.1.1/24 src 192.168.1.4/24 sport 10001 dport 10004 ipv4 udp vlan 4000 size 1500
sequence 1 0 dst-Mac 00:00:00:00:11:11 src-Mac 00:00:00:00:22:22 dst-IP 192.168.1.1 src-IP 192.168.1.3/24
set 0 dst ip min 192.168.1.1
set 0 dst ip max 192.168.1.2

# OVS: forward by destination IP
ovs-ofctl add-flow br0 ip,nw_dst=192.168.1.1,actions=output:vhost-user2
ovs-ofctl add-flow br0 ip,nw_dst=192.168.1.2,actions=output:vhost-user4
```

```bash
# Receiver on vhost-user2
docker run -it --rm --privileged --name=app-testpmd1 \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen:20.11.3 /bin/bash
dpdk-testpmd -c 0x300 -n 1 --socket-mem 1024 --file-prefix testpmd --no-pci \
    --vdev 'net_virtio_user2,mac=00:00:00:00:00:02,path=/var/run/openvswitch/vhost-user2' \
    -- -i -a --coremask=0x200 --forward-mode=rxonly

# Containers for the other receivers (names must be unique)
docker run -it --rm --privileged --name=app-testpmd2 \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen20:latest /bin/bash
docker run -it --rm --privileged --name=pktgen3 \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen:20.11.3 /bin/bash

# Receivers on vhost-user4 and vhost-user6
dpdk-testpmd -c 0xc00 -n 1 --socket-mem 1024 --file-prefix testpmd --no-pci \
    --vdev 'net_virtio_user4,mac=00:00:00:00:00:04,path=/var/run/openvswitch/vhost-user4' \
    -- -i -a --coremask=0x800 --forward-mode=rxonly
dpdk-testpmd -c 0x30000 -n 1 --socket-mem 1024 --file-prefix testpmd --no-pci \
    --vdev 'net_virtio_user6,mac=00:00:00:00:00:06,path=/var/run/openvswitch/vhost-user6' \
    -- -i -a --coremask=0x20000 --forward-mode=rxonly
```

```bash
ovs-ofctl add-flow br0 in_port=vhost-user1,actions=output:vhost-user2
ovs-ofctl add-flow br0 in_port=vhost-user3,actions=output:vhost-user2
```

```
1-------3
   pmd1
2-------4

1---pmd1----3
2---pmd2----4
```

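Server, 1500-byte packets, measured at the receiver

| Configuration | pps | bps |
|---|---|---|
| 1 PMD, 1->2 | 4 014 497 | 48 045 502 408 |
| 1 PMD, 1->2, 3->2 | 3 821 682 | 45 737 967 984 |
| 1 PMD, 1->2, 3->4 (results on 2 and 4 are similar) | 1 842 978 | 22 056 772 136 |
| 2 PMDs, 1->2, 3->2 | 5 644 703 | 67 485 371 880 |
| 2 PMDs, 1->2, 3->4 (results on 2 and 4 are similar) | 3 983 405 | 47 673 395 952 |
| 2 PMDs, 1->2, 3->2, 4->2 | 5 347 450 | 63 864 672 136 |
| 3 PMDs, 1->2, 3->2, 4->2 (probably limited by the receiver's processing capacity) | 5 332 282 | 63 816 064 880 |
| 3 PMDs, 1->2, 3->4, 5->6 (results on 2, 4 and 6 are similar) | 3 733 353 | 44 680 773 688 |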
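In the multi-PMD rows above, OVS decided how rx queues were spread across PMDs. To control the placement explicitly, an rx queue can be pinned to a PMD core with the per-interface `pmd-rxq-affinity` option, which is related to `pmd-rxq-isolate` in the parameter table. Below is a sketch that was not used in these measurements. It assumes PMDs on cores 1 and 2, which are inside `pmd-cpu-mask=0xE`.

```bash
# Pin rx queue 0 of each sender port to its own PMD core.
# Value format is "queue:core"; the cores must be part of pmd-cpu-mask.
ovs-vsctl set Interface vhost-user1 other_config:pmd-rxq-affinity="0:1"
ovs-vsctl set Interface vhost-user3 other_config:pmd-rxq-affinity="0:2"

# Show which rx queues each PMD thread now polls
ovs-appctl dpif-netdev/pmd-rxq-show
```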

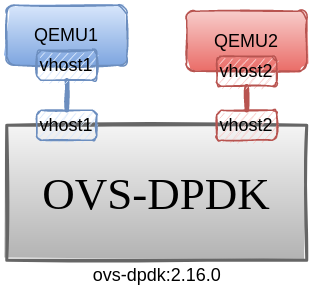

QEMU

```bash
rm /usr/local/etc/openvswitch/*
rm /usr/local/var/run/openvswitch/*
rm /usr/local/var/log/openvswitch/*

ovs-ctl --no-ovs-vswitchd start --system-id=random
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0x1
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x02
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start

ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev
ovs-vsctl add-port br0 vhost-user1 \
    -- set Interface vhost-user1 type=dpdkvhostuser ofport_request=1
ovs-vsctl add-port br0 vhost-user2 \
    -- set Interface vhost-user2 type=dpdkvhostuser ofport_request=2
```

Using QEMU virtual machines:

```bash
# VM 1 on vhost-user1
qemu-system-x86_64 -enable-kvm -m 2048 -smp 4 \
    -chardev socket,id=char0,path=/usr/local/var/run/openvswitch/vhost-user1 \
    -netdev type=vhost-user,id=mynet1,chardev=char0,vhostforce \
    -device virtio-net-pci,netdev=mynet1,mac=52:54:00:02:d9:01 \
    -object memory-backend-file,id=mem,size=2048M,mem-path=/dev/hugepages,share=on \
    -numa node,memdev=mem -mem-prealloc \
    -net user,hostfwd=tcp::10021-:22 -net nic \
    ./qemu-vm1.img

# VM 2 on vhost-user2
qemu-system-x86_64 -enable-kvm -m 2048 -smp 4 \
    -chardev socket,id=char0,path=/usr/local/var/run/openvswitch/vhost-user2 \
    -netdev type=vhost-user,id=mynet1,chardev=char0,vhostforce \
    -device virtio-net-pci,netdev=mynet1,mac=52:54:00:02:d9:02 \
    -object memory-backend-file,id=mem,size=2048M,mem-path=/dev/hugepages,share=on \
    -numa node,memdev=mem -mem-prealloc \
    -net user,hostfwd=tcp::10022-:22 -net nic \
    ./qemu-vm2.img &
```

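Test results

| Case | Configuration | iperf-tcp |
|---|---|---|
| c1 | Default flow (NORMAL) | 22.3 Gbps |
| c2 | c1 + flows matching the input port | 22.4 Gbps |
| c3 | c2 + vhost-postcopy-support | 22.3 Gbps |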

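In all three cases the throughput is nearly identical! With iperf UDP, the rate is normal when the requested bandwidth is below 5 Gbps. Requesting more than 5 Gbps, however, makes the rate drop sharply to about 1.5 Gbps.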

However, the result also depends on the QEMU VM configuration, which was not tuned further.

Physical NICs

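Here the tests run on two physical servers. Each server has two NICs with two ports each: one NIC has optical ports and the other has regular RJ45 ports. Apart from one RJ45 port that is connected to the network, all remaining ports are directly connected in pairs.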

```
$ lspci | grep Eth
3d:00.0 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GBASE-T (rev 09)
3d:00.1 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GBASE-T (rev 09)
af:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)
af:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

$ uname -a
Linux uestc 5.15.0-52-generic

$ lscpu
Model name:   Intel(R) Xeon(R) Gold 6133 CPU @ 2.50GHz
Stepping:     4
CPU MHz:      1000.000
CPU max MHz:  3000.0000
CPU min MHz:  1000.0000
BogoMIPS:     5000.00
-----------------------------------------------
Model name:   Intel(R) Xeon(R) Gold 6145 CPU @ 2.00GHz
Stepping:     4
CPU MHz:      1000.120
CPU max MHz:  3700.0000
CPU min MHz:  1000.0000
BogoMIPS:     4000.00
```

ovs

```bash
# Both servers: clean start of kernel-datapath OVS
rm /usr/local/etc/openvswitch/*
rm /usr/local/var/run/openvswitch/*
rm /usr/local/var/log/openvswitch/*
ovs-ctl start
ovs-vsctl add-br br0 -- set bridge br0 datapath_type=system

# Server 1
ifconfig br0 192.168.5.80
ovs-vsctl add-port br0 eno0
ovs-ofctl add-flow br0 ip,nw_dst=192.168.5.40,actions=output:eno0
ovs-ofctl add-flow br0 ip,nw_dst=192.168.5.80,actions=output:LOCAL

# Server 2
ifconfig br0 192.168.5.40
ovs-vsctl add-port br0 eno1
ovs-ofctl add-flow br0 ip,nw_dst=192.168.5.80,actions=output:eno1
ovs-ofctl add-flow br0 ip,nw_dst=192.168.5.40,actions=output:LOCAL
```

ovs-dpdk

```bash
# Both servers: clean start of OVS with DPDK
rm /usr/local/etc/openvswitch/*
rm /usr/local/var/run/openvswitch/*
rm /usr/local/var/log/openvswitch/*
ovs-ctl --no-ovs-vswitchd start --system-id=random
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0x1
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x2
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br br1 -- set bridge br1 datapath_type=netdev

# Server 1: bind the NIC ports to vfio-pci (no-IOMMU mode)
ifconfig br1 192.168.6.80
modprobe vfio enable_unsafe_noiommu_mode=1
echo 1 > /sys/module/vfio/parameters/enable_unsafe_noiommu_mode
modprobe vfio-pci
ifconfig eno0 down
ifconfig ens1f0 down
ifconfig ens1f1 down
dpdk-devbind.py --bind=vfio-pci 0000:60:00.0
dpdk-devbind.py --bind=vfio-pci 0000:18:00.0
dpdk-devbind.py --bind=vfio-pci 0000:18:00.1
ovs-vsctl add-port br1 rj45 \
    -- set Interface rj45 type=dpdk options:dpdk-devargs=0000:60:00.0
ovs-ofctl add-flow br1 ip,nw_dst=192.168.6.40,actions=output:rj45
ovs-ofctl add-flow br1 ip,nw_dst=192.168.6.80,actions=output:LOCAL

# Server 2
ifconfig br1 192.168.6.40
modprobe vfio enable_unsafe_noiommu_mode=1
echo 1 > /sys/module/vfio/parameters/enable_unsafe_noiommu_mode
modprobe vfio-pci
ifconfig eno1 down
ifconfig ens802f0 down
ifconfig ens802f1 down
dpdk-devbind.py --bind=vfio-pci 0000:3d:00.0
dpdk-devbind.py --bind=vfio-pci 0000:af:00.0
dpdk-devbind.py --bind=vfio-pci 0000:af:00.1
ovs-vsctl add-port br1 rj45 \
    -- set Interface rj45 type=dpdk options:dpdk-devargs=0000:3d:00.0
ovs-ofctl add-flow br1 ip,nw_dst=192.168.6.80,actions=output:rj45
ovs-ofctl add-flow br1 ip,nw_dst=192.168.6.40,actions=output:LOCAL
```

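Test results

| Case | Configuration | Throughput (iperf-tcp unless noted) |
|---|---|---|
| c1 | No OVS, ports directly connected (RJ45 and optical are similar) | 9.38 Gbps |
| c2 | OVS (RJ45 and optical again similar) | 9.41 Gbps |
| c3 | OVS-DPDK (unclear why it is this slow) | 3.89 Gbps |
| c4 | OVS-DPDK with pktgen | 9.8 Gbps |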

```bash
ovs-ofctl add-flow br1 in_port=vhost-user1,actions=output:g1
ovs-ofctl add-flow br1 in_port=g1,actions=output:vhost-user1
```
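One factor worth checking for the slow c3 case is NUMA locality between the DPDK-bound NIC and the PMD core; it was not investigated in these tests. On a multi-socket server, a PMD core on a different socket from the NIC generally costs throughput. Both values can be read from sysfs. The sketch below assumes that a core's `physical_package_id` matches its NUMA node, which is common but not guaranteed (for example with sub-NUMA clustering). The helper names and the `SYSFS_ROOT` override are hypothetical; the override exists only so the functions can be tested.

```bash
#!/usr/bin/env bash
# NUMA node of a PCI device (-1 means the platform does not report one).
nic_numa_node() {
  local f="${SYSFS_ROOT:-/sys}/bus/pci/devices/$1/numa_node"
  if [[ -r "$f" ]]; then cat "$f"; else echo unknown; fi
}

# Physical package (socket) id of a CPU core.
core_socket() {
  local f="${SYSFS_ROOT:-/sys}/devices/system/cpu/cpu$1/topology/physical_package_id"
  if [[ -r "$f" ]]; then cat "$f"; else echo unknown; fi
}

pci=0000:3d:00.0   # NIC port bound to vfio-pci on the second server
pmd_core=1         # pmd-cpu-mask=0x2
echo "NIC $pci NUMA node: $(nic_numa_node "$pci")"
echo "PMD core $pmd_core socket: $(core_socket "$pmd_core")"
```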

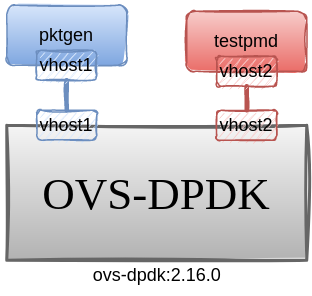

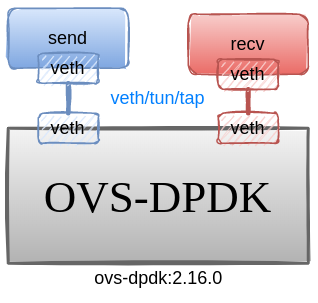

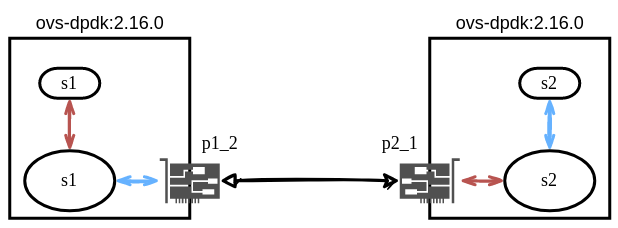

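vhost-user OVS-OVS

Addendum: this setup uses a veth pair to connect two containers, with the aim of measuring OVS-DPDK forwarding performance. However, veth ports are not DPDK ports, so OVS falls back to system-datapath-style packet handling for them. Also, after building the topology below and starting OVS, no core is pegged at 100%, even though a running PMD thread never sleeps. Only after a vhost-user port is added does one core go to 100%.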
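To confirm which ports a PMD thread is actually handling, inspect the datapath and the ovs-vswitchd threads from inside a container. The commands below are standard OVS and procps tools, sketched here as a diagnostic step that the original tests did not use.

```bash
# List the datapaths and their ports; each port's type is printed next to it,
# so veth (system) ports and dpdkvhostuser ports can be told apart.
ovs-appctl dpif/show

# Per-thread CPU usage of ovs-vswitchd: a PMD thread polling a DPDK port
# stays near 100%, while veth-only setups show no such thread.
top -H -p "$(pidof ovs-vswitchd)"
```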

Topology setup:

```bash
# Reserve 4 GB of 1 GB hugepages and load the kernel OVS module
dpdk-hugepages.py -p 1G --setup 4G
modprobe openvswitch

# Two containers sharing the hugepage mount
sudo docker create -it --name=s1 --privileged -v /dev/hugepages:/dev/hugepages -v /root/run:/root/run ovs-dpdk:2.16.0-qdisc
sudo docker create -it --name=s2 --privileged -v /dev/hugepages:/dev/hugepages -v /root/run:/root/run ovs-dpdk:2.16.0-qdisc
sudo docker start s1
sudo docker start s2

# veth pair between the two containers
sudo ip link add p1_2 type veth peer name p2_1 > /dev/null
sudo ip link set dev p1_2 name p1_2 netns $(sudo docker inspect -f '{{.State.Pid}}' s1) up
sudo ip link set dev p2_1 name p2_1 netns $(sudo docker inspect -f '{{.State.Pid}}' s2) up
```

Start OVS:

```bash
# Inside container s1
docker exec -it s1 bash
ovs-ctl --no-ovs-vswitchd start --system-id=1001
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x02
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br s1 -- set bridge s1 datapath_type=netdev
ifconfig s1 192.168.1.12 netmask 255.255.255.0 up
ovs-vsctl add-port s1 p1_2 -- set Interface p1_2 ofport_request=12

# Inside container s2
docker exec -it s2 bash
ovs-ctl --no-ovs-vswitchd start --system-id=1002
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x08
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br s2 -- set bridge s2 datapath_type=netdev
ifconfig s2 192.168.1.21 netmask 255.255.255.0 up
ovs-vsctl add-port s2 p2_1 -- set Interface p2_1 ofport_request=21
```

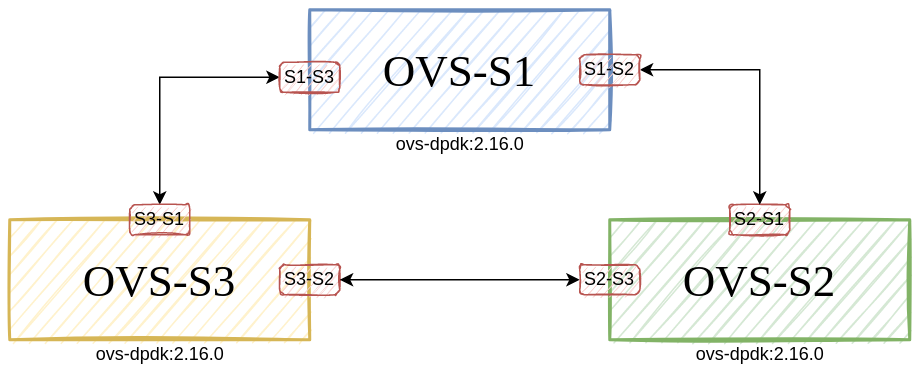

OVS-OVS-OVS

Topology setup:

```bash
dpdk-hugepages.py -p 1G --setup 6G
modprobe openvswitch

# Three containers sharing the hugepage mount
sudo docker create -it --name=s1 --privileged -v /dev/hugepages:/dev/hugepages -v /root/run:/root/run ovs-dpdk:2.16.0-vlog
sudo docker create -it --name=s2 --privileged -v /dev/hugepages:/dev/hugepages -v /root/run:/root/run ovs-dpdk:2.16.0-vlog
sudo docker create -it --name=s3 --privileged -v /dev/hugepages:/dev/hugepages -v /root/run:/root/run ovs-dpdk:2.16.0-vlog
sudo docker start s1 s2 s3

# veth pairs: s1-s2, s3-s2, s1-s3
sudo ip link add s1_2 type veth peer name s2_1 > /dev/null
sudo ip link add s3_2 type veth peer name s2_3 > /dev/null
sudo ip link add s1_3 type veth peer name s3_1 > /dev/null
sudo ip link set dev s1_2 name s1_2 netns $(sudo docker inspect -f '{{.State.Pid}}' s1) up
sudo ip link set dev s1_3 name s1_3 netns $(sudo docker inspect -f '{{.State.Pid}}' s1) up
sudo ip link set dev s2_1 name s2_1 netns $(sudo docker inspect -f '{{.State.Pid}}' s2) up
sudo ip link set dev s2_3 name s2_3 netns $(sudo docker inspect -f '{{.State.Pid}}' s2) up
sudo ip link set dev s3_1 name s3_1 netns $(sudo docker inspect -f '{{.State.Pid}}' s3) up
sudo ip link set dev s3_2 name s3_2 netns $(sudo docker inspect -f '{{.State.Pid}}' s3) up
```

Start OVS:

```bash
# Inside container s1
docker exec -it s1 bash
ovs-ctl --no-ovs-vswitchd start --system-id=1001
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x02
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br s1 -- set bridge s1 datapath_type=netdev
ovs-vsctl set bridge s1 other_config:hwaddr="00:00:00:00:10:01"
ifconfig s1 192.168.10.1 netmask 255.255.255.0 up
ovs-vsctl add-port s1 s1_2 -- set Interface s1_2 ofport_request=12
ovs-vsctl add-port s1 s1_3 -- set Interface s1_3 ofport_request=13

# Inside container s2
docker exec -it s2 bash
ovs-ctl --no-ovs-vswitchd start --system-id=1002
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x08
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br s2 -- set bridge s2 datapath_type=netdev
ovs-vsctl set bridge s2 other_config:hwaddr="00:00:00:00:10:02"
ifconfig s2 192.168.10.2 netmask 255.255.255.0 up
ovs-vsctl add-port s2 s2_1 -- set Interface s2_1 ofport_request=21
ovs-vsctl add-port s2 s2_3 -- set Interface s2_3 ofport_request=23

# Inside container s3
docker exec -it s3 bash
ovs-ctl --no-ovs-vswitchd start --system-id=1002
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x20
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br s3 -- set bridge s3 datapath_type=netdev
ovs-vsctl set bridge s3 other_config:hwaddr="00:00:00:00:10:03"
ifconfig s3 192.168.10.3 netmask 255.255.255.0 up
ovs-vsctl add-port s3 s3_1 -- set Interface s3_1 ofport_request=31
ovs-vsctl add-port s3 s3_2 -- set Interface s3_2 ofport_request=32
```

Forwarding flows:

```bash
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.1,actions=output:LOCAL
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.2,actions=output:s1_2
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.3,actions=output:s1_3

ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.2,actions=output:LOCAL
ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.1,actions=output:s2_1
ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.3,actions=output:s2_3

ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.3,actions=output:LOCAL
ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.1,actions=output:s3_1
ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.2,actions=output:s3_2

# Static ARP entries
arp -s 192.168.10.1 00:00:00:00:10:01
arp -s 192.168.10.2 00:00:00:00:10:02
arp -s 192.168.10.3 00:00:00:00:10:03
```

Forwarding flows 2: 1<->2<->3 (s1 and s3 are not directly connected):

```bash
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.1,actions=output:LOCAL
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.2,actions=output:s1_2
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.3,actions=output:s1_2

ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.2,actions=output:LOCAL
ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.1,actions=output:s2_1
ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.3,actions=output:s2_3

ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.3,actions=output:LOCAL
ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.1,actions=output:s3_2
ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.2,actions=output:s3_2
```

Testing ATS

```bash
ovs-vsctl set interface s1_2 other_config:qdisc-params="rate:1=10000"
ovs-appctl vlog/set dpif_qdisc:file:dbg
ovs-vsctl set interface s1_2 other_config:qdisc=ATS
ovs-vsctl set interface s2_3 other_config:qdisc=ATS
ovs-vsctl set interface s2_1 other_config:qdisc=ATS
ovs-vsctl set interface s3_2 other_config:qdisc=ATS
```

```bash
# Inside container s1
docker exec -it s1 bash
ovs-ctl --no-ovs-vswitchd start --system-id=1001
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x02
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br s1 -- set bridge s1 datapath_type=netdev
ovs-vsctl set bridge s1 other_config:hwaddr="00:00:00:00:10:01"
ifconfig s1 192.168.10.1 netmask 255.255.255.0 up
ovs-vsctl add-port s1 s1_2 -- set Interface s1_2 ofport_request=12
ovs-vsctl add-port s1 s1_3 -- set Interface s1_3 ofport_request=13
arp -s 192.168.10.1 00:00:00:00:10:01
arp -s 192.168.10.2 00:00:00:00:10:02
arp -s 192.168.10.3 00:00:00:00:10:03
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.1,actions=output:LOCAL
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.2,actions=output:s1_2
ovs-ofctl add-flow s1 ip,nw_dst=192.168.10.3,actions=output:s1_2

# Inside container s2
docker exec -it s2 bash
ovs-ctl --no-ovs-vswitchd start --system-id=1002
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x08
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-appctl vlog/set dpif_qdisc:file:dbg
ovs-vsctl add-br s2 -- set bridge s2 datapath_type=netdev
ovs-vsctl set bridge s2 other_config:hwaddr="00:00:00:00:10:02"
ifconfig s2 192.168.10.2 netmask 255.255.255.0 up
ovs-vsctl add-port s2 s2_1 -- set Interface s2_1 ofport_request=21
ovs-vsctl add-port s2 s2_3 -- set Interface s2_3 ofport_request=23
arp -s 192.168.10.1 00:00:00:00:10:01
arp -s 192.168.10.2 00:00:00:00:10:02
arp -s 192.168.10.3 00:00:00:00:10:03
ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.2,actions=output:LOCAL
ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.1,actions=output:s2_1
ovs-ofctl add-flow s2 ip,nw_dst=192.168.10.3,actions=output:s2_3

# Inside container s3
docker exec -it s3 bash
ovs-ctl --no-ovs-vswitchd start --system-id=1002
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x20
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br s3 -- set bridge s3 datapath_type=netdev
ovs-vsctl set bridge s3 other_config:hwaddr="00:00:00:00:10:03"
ifconfig s3 192.168.10.3 netmask 255.255.255.0 up
ovs-vsctl add-port s3 s3_1 -- set Interface s3_1 ofport_request=31
ovs-vsctl add-port s3 s3_2 -- set Interface s3_2 ofport_request=32
arp -s 192.168.10.1 00:00:00:00:10:01
arp -s 192.168.10.2 00:00:00:00:10:02
arp -s 192.168.10.3 00:00:00:00:10:03
ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.3,actions=output:LOCAL
ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.1,actions=output:s3_2
ovs-ofctl add-flow s3 ip,nw_dst=192.168.10.2,actions=output:s3_2
```

veth-OVS-veth

```bash
sudo docker create -it --name=s1 --privileged -v /dev/hugepages:/dev/hugepages -v /root/run:/root/run ovs-dpdk:2.16.0-vlog
sudo docker create -it --name=s2 --privileged -v /dev/hugepages:/dev/hugepages -v /root/run:/root/run ovs-dpdk:2.16.0-vlog
sudo docker start s1 s2

# One veth pair per container; the host ends (sh_1, sh_2) are attached to OVS
sudo ip link add sh_1 type veth peer name s1_h > /dev/null
sudo ip link add sh_2 type veth peer name s2_h > /dev/null
sudo ip link set dev s1_h name s1_h netns $(sudo docker inspect -f '{{.State.Pid}}' s1) up
sudo ip link set dev s2_h name s2_h netns $(sudo docker inspect -f '{{.State.Pid}}' s2) up
ifconfig sh_1 up
ifconfig sh_2 up
```

```bash
# Inside container s1
docker exec -it s1 bash
ifconfig s1_h 192.168.10.1
ip link set dev s1_h address 00:00:00:00:10:01
arp -s 192.168.10.2 00:00:00:00:10:02

# Inside container s2
docker exec -it s2 bash
ifconfig s2_h 192.168.10.2
ip link set dev s2_h address 00:00:00:00:10:02
arp -s 192.168.10.1 00:00:00:00:10:01
```

```bash
# OVS on the host
rm /usr/local/etc/openvswitch/*
rm /usr/local/var/run/openvswitch/*
rm /usr/local/var/log/openvswitch/*
ovs-ctl --no-ovs-vswitchd start --system-id=random
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x02
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start

ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev
ovs-vsctl add-port br0 sh_1 -- set Interface sh_1 ofport_request=11
ovs-vsctl add-port br0 sh_2 -- set Interface sh_2 ofport_request=12
ovs-ofctl add-flow br0 ip,nw_dst=192.168.10.1,actions=output:sh_1
ovs-ofctl add-flow br0 ip,nw_dst=192.168.10.2,actions=output:sh_2
ovs-vsctl set interface sh_1 other_config:qdisc=ATS
ovs-vsctl set interface sh_2 other_config:qdisc=ATS
```

PKTGEN-OVS-TESTPMD

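Because the topologies above had connectivity problems, a single node was used instead to test how OVS throughput relates to the number of PMDs. OVS runs directly on the host, while pktgen and testpmd run in Docker containers.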

Topology setup: the basic procedure is shown below, but the parameters were changed for the actual tests!

```bash
# OVS on the host
rm /usr/local/etc/openvswitch/*
rm /usr/local/var/run/openvswitch/*
rm /usr/local/var/log/openvswitch/*
ovs-ctl --no-ovs-vswitchd start --system-id=random
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x02
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024"
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-ctl --no-ovsdb-server --db-sock="$DB_SOCK" start
ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev
ovs-vsctl add-port br0 vhost-user1 \
    -- set Interface vhost-user1 type=dpdkvhostuser ofport_request=1
ovs-vsctl add-port br0 vhost-user2 \
    -- set Interface vhost-user2 type=dpdkvhostuser ofport_request=2

# pktgen container: sender on vhost-user1
docker run -it --rm --privileged --name=app-pktgen \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen20:latest /bin/bash
pktgen -c 0x19 --main-lcore 3 -n 1 --socket-mem 1024 --file-prefix pktgen --no-pci \
    --vdev 'net_virtio_user1,mac=00:00:00:00:00:01,path=/var/run/openvswitch/vhost-user1' \
    -- -T -P -m "0.0"

# testpmd container: receiver on vhost-user2
docker run -it --rm --privileged --name=app-testpmd \
    -v /dev/hugepages:/dev/hugepages \
    -v /usr/local/var/run/openvswitch:/var/run/openvswitch \
    pktgen20:latest /bin/bash
dpdk-testpmd -c 0xE0 -n 1 --socket-mem 1024 --file-prefix testpmd --no-pci \
    --vdev 'net_virtio_user2,mac=00:00:00:00:00:02,path=/var/run/openvswitch/vhost-user2' \
    -- -i -a --coremask=0xc0 --forward-mode=rxonly
```

Parameter notes:

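pmd-cpu-mask: the cores that PMD threads are pinned to, which also determines how many PMD threads are created.

dpdk-lcore-mask: the cores on which DPDK lcore threads are created. These threads handle DPDK-library internal work such as logging.

-c: the cores on which pktgen runs its send/receive threads.

-m: the mapping between cores and ports. Note that port numbers start from 0:

```
"1.0, 2.1, 3.2"        - core 1 handles port 0 rx/tx,
                         core 2 handles port 1 rx/tx
                         core 3 handles port 2 rx/tx
1.[0-2], 2.3, ...      - core 1 handle ports 0,1,2 rx/tx,
                         core 2 handle port 3 rx/tx
[1:2].0, [4:6].1, ...  - core 1 handles port 0 rx, core 2 handles port 0 tx,
```

--coremask=0xXX: the cores on which testpmd runs packet forwarding.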
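The masks used here are easier to read once decoded into core IDs. For testpmd, `-c 0xE0` gives cores 5, 6 and 7. The main lcore (by default the lowest core in `-c`) only handles the command line, so forwarding runs on `--coremask=0xc0`, which is cores 6 and 7. Below is a small hypothetical bash decoder for such masks.

```bash
#!/usr/bin/env bash
# Decode a hex CPU mask (EAL -c, testpmd --coremask, pmd-cpu-mask)
# into a space-separated list of core IDs, lowest first.
mask_to_cores() {
  local mask core=0
  local -a out=()
  mask=$(( $1 ))
  while (( mask > 0 )); do
    if (( mask & 1 )); then out+=("$core"); fi
    mask=$(( mask >> 1 ))
    core=$(( core + 1 ))
  done
  echo "${out[*]}"
}

mask_to_cores 0x19   # pktgen -c 0x19       -> prints "0 3 4"
mask_to_cores 0xE0   # testpmd -c 0xE0      -> prints "5 6 7"
mask_to_cores 0xc0   # --coremask=0xc0      -> prints "6 7"
```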

Test 1: the CPU is an i7-12700K and the OS is Ubuntu 20.04!

```bash
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x2
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0x1

pktgen -c 0x30 -n 1 --socket-mem 1024 --file-prefix pktgen --no-pci \
    --vdev 'net_virtio_user1,mac=00:00:00:00:00:01,path=/var/run/openvswitch/vhost-user1' \
    -- -T -P -m "5.0"

dpdk-testpmd -c 0x300 -n 1 --socket-mem 1024 --file-prefix testpmd --no-pci \
    --vdev 'net_virtio_user2,mac=00:00:00:00:00:02,path=/var/run/openvswitch/vhost-user2' \
    -- -i -a --coremask=0x200 --forward-mode=rxonly
```

As shown, CPU usage matches the configuration! Pktgen occupies two cores.

The rate with no flows configured differs clearly from the rate after flows are configured! Without flows:

```
testpmd> show port stats all
  Throughput (since last show)
  Rx-pps:      6284600          Rx-bps:   3 016 608 216
  Tx-pps:            0          Tx-bps:               0

testpmd> show port stats all
  Throughput (since last show)
  Rx-pps:      3572386          Rx-bps:  42 754 318 664
  Tx-pps:            0          Tx-bps:               0
```

After adding a flow that matches the input port:

```bash
ovs-ofctl add-flow br0 in_port=vhost-user1,actions=output:vhost-user2
```

```
testpmd> show port stats all
  Throughput (since last show)
  Rx-pps:     16353825          Rx-bps:   7 849 813 576
  Tx-pps:            0          Tx-bps:               0

testpmd> show port stats all
  Throughput (since last show)
  Rx-pps:      7380556          Rx-bps:  88 330 499 920
  Tx-pps:            0          Tx-bps:               0
```

Test 2

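After the flow was installed above, the forwarding rate improved a lot. It is still unclear which stage (sending, forwarding, or receiving) is the bottleneck, and it may still be forwarding. So the next step is to measure the rate with two PMD threads enabled!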

```bash
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=0x6
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0x1
ovs-vsctl set Interface vhost-user1 options:n_rxq=2

pktgen -c 0x30 -n 1 --socket-mem 1024 --file-prefix pktgen --no-pci \
    --vdev 'net_virtio_user1,mac=00:00:00:00:00:01,path=/var/run/openvswitch/vhost-user1' \
    -- -T -P -m "5.0"

dpdk-testpmd -c 0x300 -n 1 --socket-mem 1024 --file-prefix testpmd --no-pci \
    --vdev 'net_virtio_user2,mac=00:00:00:00:00:02,path=/var/run/openvswitch/vhost-user2' \
    -- -i -a --coremask=0x200 --forward-mode=rxonly
```

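According to the log, two PMD threads were indeed started, but vhost-user1 did not get two rx queues! The documentation describes n_rxq as "Not supported by DPDK vHost interfaces.", yet Intel's documentation also uses this parameter to set the queue count!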

```
2022-07-03T09:32:20.070Z|00075|dpif_netdev|INFO|PMD thread on numa_id: 0, core id : 1 created.
2022-07-03T09:32:20.073Z|00076|dpif_netdev|INFO|PMD thread on numa_id: 0, core id : 2 created.
2022-07-03T09:32:20.073Z|00077|dpif_netdev|INFO|There are 2 pmd threads on numa node 0
...
2022-07-03T09:32:20.191Z|00086|dpif_netdev|INFO|Core 1 on numa node 0 assigned port 'vhost-user1' rx queue 0 (measured processing cycles 0).
2022-07-03T09:32:20.191Z|00087|dpif_netdev|INFO|Core 2 on numa node 0 assigned port 'vhost-user2' rx queue 0 (measured processing cycles 0).
```

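An rx queue can only be handled by one PMD. So although two PMDs are configured here, only one of them actually forwards packets. Compared with before, that PMD no longer has to poll the queue on vhost-user2, but the cost of polling an empty queue is unknown.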
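For vhost-user ports, the number of rx queues seen by OVS is negotiated by the vhost frontend rather than set with `n_rxq`. With a DPDK virtio-user frontend, as used by pktgen here, our understanding is that the `queues` devarg of the `net_virtio_user` vdev sets the number of queue pairs. Below is an untested sketch of the modified `--vdev` argument. The generator must also be configured to actually send on both queues, which is not covered here.

```bash
# Hypothetical: ask the virtio-user frontend for 2 queue pairs so that OVS
# creates 2 rx queues on vhost-user1 (one per PMD when two PMDs exist).
VDEV='net_virtio_user1,mac=00:00:00:00:00:01,path=/var/run/openvswitch/vhost-user1,queues=2'
# Pass it to pktgen as: --vdev "$VDEV"
echo "$VDEV"
```

If the negotiation works, the ovs-vswitchd log should then list both rx queue 0 and rx queue 1 for vhost-user1, in lines like the ones above.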

With this two-PMD configuration, the measured receive rate is still about 88 Gbps, so the bottleneck remains unclear!

```
testpmd> show port stats all
  Throughput (since last show)
  Rx-pps:      7421329          Rx-bps:  88818467544
  Tx-pps:            0          Tx-bps:            0
```
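OVS's per-PMD statistics offer one way to narrow down the bottleneck; they were not used in these tests. As a rough heuristic, if the PMD polling vhost-user1 spends almost no cycles idle, forwarding is probably the limit. If it still has plenty of idle cycles, the limit is more likely in pktgen or testpmd. A sketch:

```bash
# Reset the per-PMD counters, let traffic run for a while, then read them back.
ovs-appctl dpif-netdev/pmd-stats-clear
sleep 10
ovs-appctl dpif-netdev/pmd-stats-show   # compare idle vs processing cycles per PMD

# More detailed per-PMD metrics; requires other_config:pmd-perf-metrics=true
# (see the parameter table).
ovs-appctl dpif-netdev/pmd-perf-show
```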

